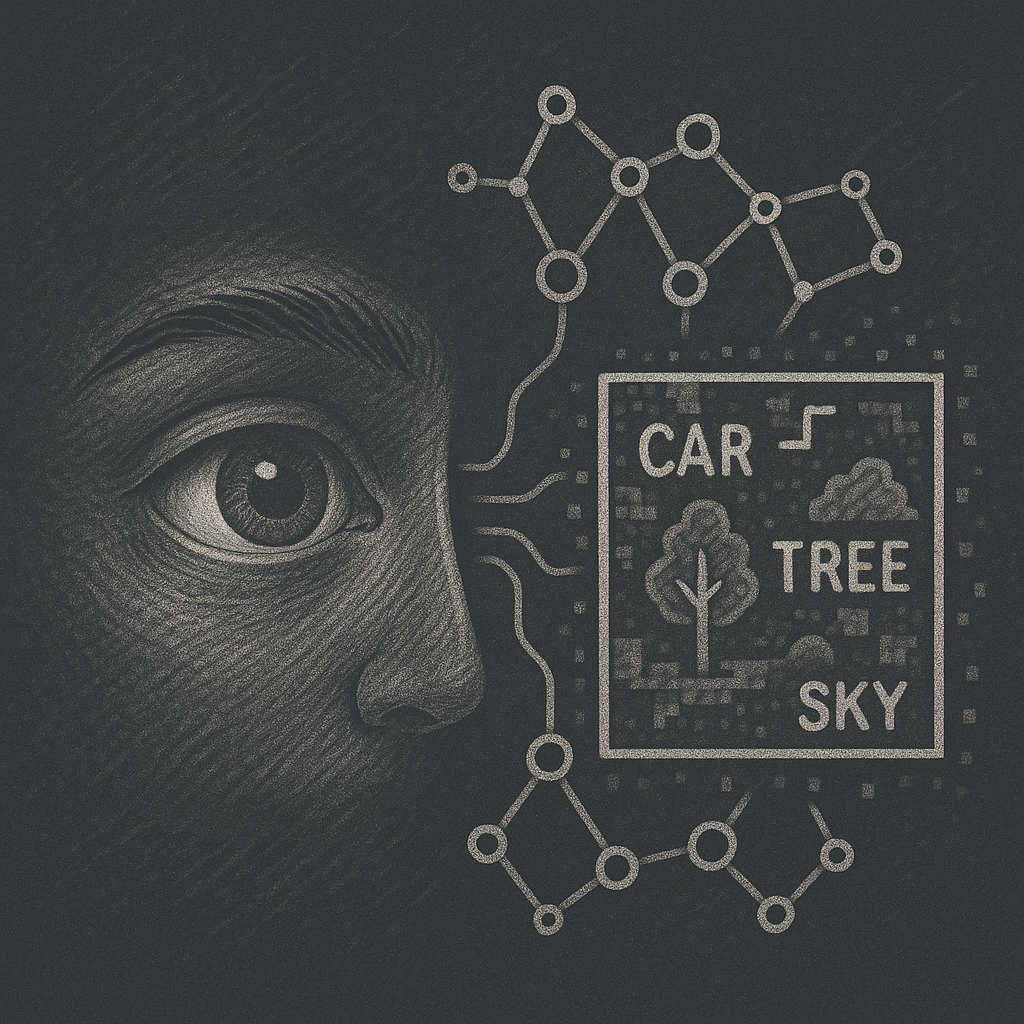

How AI Sees the World

Super Simple Summary

A beginner’s journey into computer vision using Azure AI—exploring how machines analyze, tag, and understand images, with hands-on experience and practical insights.

Spoiler alert: It doesn’t blink, but it learns.

A few weeks ago, I wouldn’t have guessed I’d be spending my Sunday teaching an AI model how to look at pictures—and make sense of them. But here we are. I’ve officially completed the “Analyze Images” module in Microsoft’s AI Engineer career path, and I’m buzzing with excitement.

Let’s talk about how computers “see”—and what I learned along the way.

🚀 My Mission: Analyze Images with Azure AI Vision

This was the first hands-on step in the Create Computer Vision Solutions in Azure AI learning path. In the simplest terms, I learned how to use Azure’s AI services to teach a computer to look at an image and answer:

What’s in this picture?

Think:

- “That’s a cat.”

- “There’s a tree, and it’s snowing.”

- “This image contains a logo.”

It’s called image analysis, and it’s surprisingly powerful—and kind of magical.

🔍 What the Module Covers

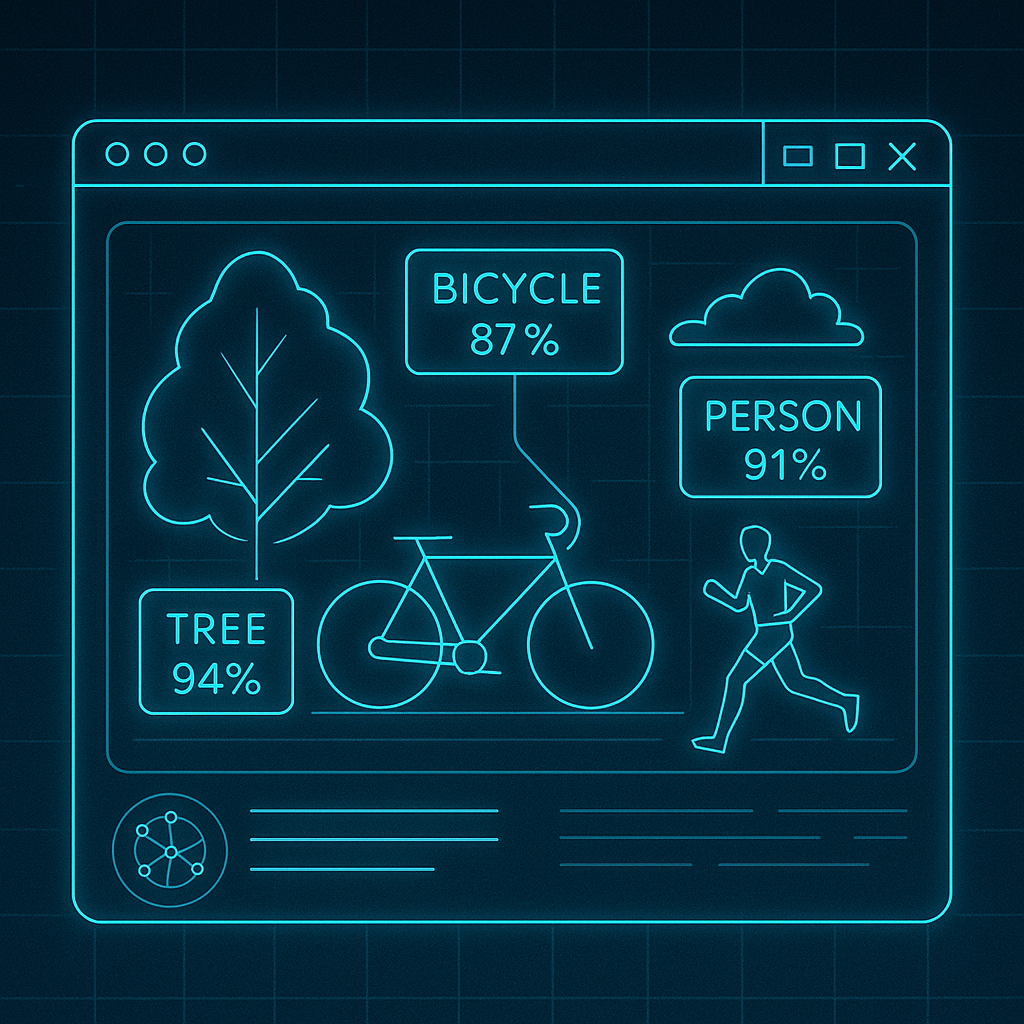

The module focused on using the Azure AI Vision API, which helps developers:

- Tag and describe images (objects, scenery, actions)

- Detect text (hello, OCR!)

- Identify adult/racy content (for safety filtering)

- Generate confidence scores (how sure the AI is)

All of this can be done using just a few lines of code—or even via a no-code playground!
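To give a feel for what "a few lines of code" might look like, here is a minimal Python sketch that only builds the HTTP request for an image analysis call and does not send it. The URL path, the `api-version` value, the feature names, and the `Ocp-Apim-Subscription-Key` header are my assumptions about the Image Analysis REST API, so check them against the current Azure docs. The endpoint, key, and image URL are placeholders, and `build_analyze_request` is a hypothetical helper name.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request


def build_analyze_request(endpoint, key, image_url,
                          features=("tags", "caption", "read")):
    """Build (but do not send) a POST request for an image analysis call."""
    # Assumed query parameters: an API version plus a comma-separated feature list.
    query = urlencode(
        {"api-version": "2024-02-01", "features": ",".join(features)},
        safe=",",  # keep the commas in the feature list readable
    )
    url = f"{endpoint.rstrip('/')}/computervision/imageanalysis:analyze?{query}"
    # The image is referenced by URL in a small JSON body.
    body = json.dumps({"url": image_url}).encode("utf-8")
    headers = {
        "Ocp-Apim-Subscription-Key": key,  # assumed auth header name
        "Content-Type": "application/json",
    }
    return Request(url, data=body, headers=headers, method="POST")


# Placeholder values: substitute your own resource endpoint, key, and image.
request = build_analyze_request(
    "https://my-vision-resource.cognitiveservices.azure.com/",
    "YOUR_KEY",
    "https://example.com/bike.jpg",
)
print(request.full_url)
```

With a real endpoint and key, passing `request` to `urllib.request.urlopen` should return a JSON document with the tags, caption, and text the service found. Which features are available can differ between API versions.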

🧪 My Hands-On Experience

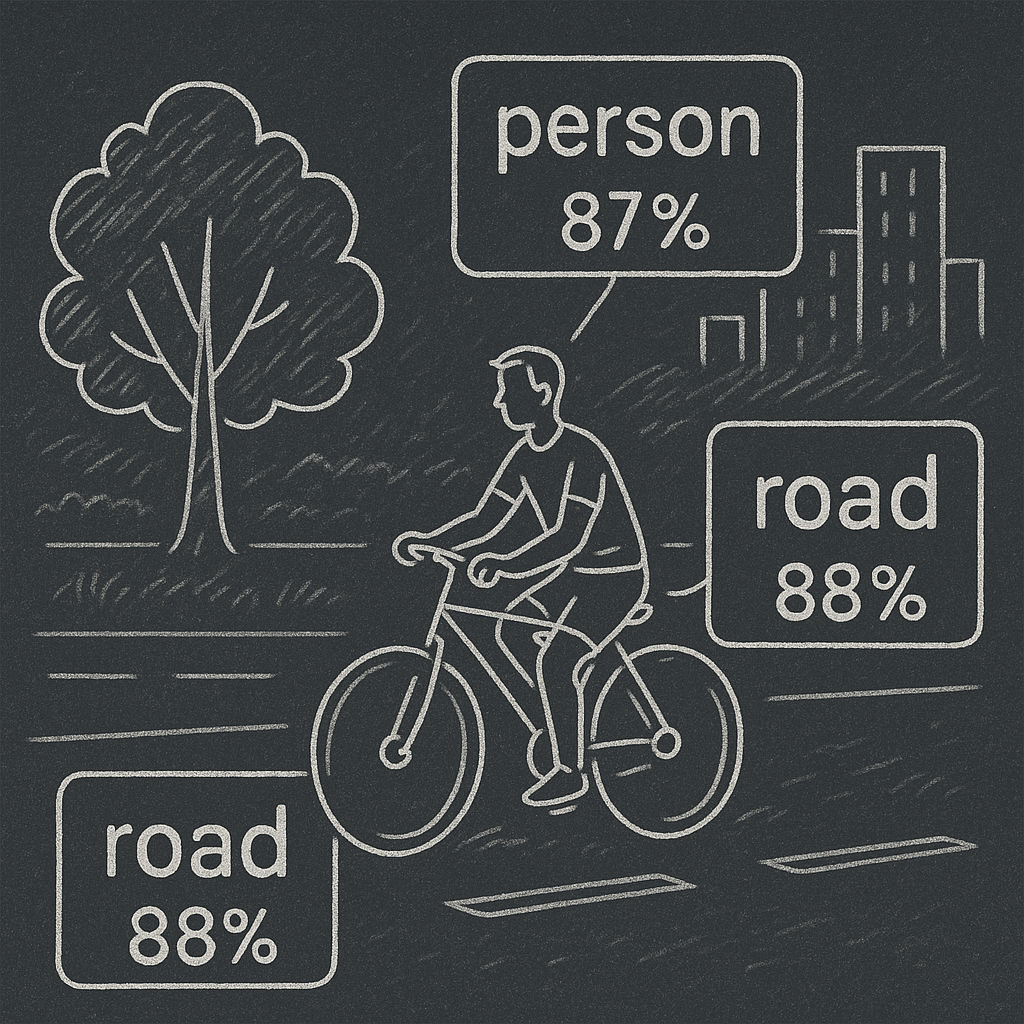

Here’s what I did step-by-step:

- Uploaded images into the Azure AI Vision Studio

- Ran image analysis with the “analyze image” option

- Read the results, including tags like:

“outdoor”, “bicycle”, “person”, “road”

- With confidence levels like:

94.2%, 87.5%, etc.

- Tried the OCR feature (Optical Character Recognition) to extract text from images
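If you go the Python route, the tag results are easy to work with once you have them as data. Here is a small sketch that keeps only the tags above a confidence threshold, using the list-of-dicts shape as a simplified stand-in for the real response. Pairing each tag with a specific score is my assumption, and the scores for “person” and “road” are invented purely for illustration. `confident_tags` is a hypothetical helper.

```python
def confident_tags(tags, threshold=0.8):
    """Return tags at or above the threshold, most confident first."""
    kept = [tag for tag in tags if tag["confidence"] >= threshold]
    return sorted(kept, key=lambda tag: tag["confidence"], reverse=True)


# Simplified sample data, not a real API response.
sample_tags = [
    {"name": "outdoor", "confidence": 0.942},
    {"name": "bicycle", "confidence": 0.875},
    {"name": "person", "confidence": 0.81},  # invented score
    {"name": "road", "confidence": 0.62},    # invented score
]

for tag in confident_tags(sample_tags):
    print(f"{tag['name']}: {tag['confidence']:.1%}")
```

Raising or lowering `threshold` is a simple way to trade off between missing real objects and showing tags the model is unsure about.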

Tech used: Azure AI Vision Studio + optional Python snippets

It honestly felt like showing a robot a photo album and asking it, “What do you see?”

And then being impressed when it got it mostly right!

🤔 What Surprised Me

- Accuracy: It recognized items like glasses, buildings, signs—even in weird lighting.

- Speed: Results came back in less than a second.

- Confidence Scores: I love that it tells you how sure it is about each tag. It’s not pretending to be perfect.

🧠 What I Learned (And You Can Too)

- AI “sees” differently than we do—it relies on patterns, pixels, and pre-trained models.

- You don’t need a PhD to get started. Azure’s tools make this accessible to beginners.

- Vision services are everywhere: in phone cameras, accessibility tools, smart cars—even social media.

💡 Where I’m Taking This Next

This module opened up so many ideas. I could:

- Build an app that describes pictures aloud for the visually impaired

- Create a tool that flags inappropriate content in uploaded images

- Teach a chatbot to understand photos users upload

🧭 Your Turn

Want to try it yourself?

✅ Go to the Analyze Images module

✅ Use the Vision Studio (no code needed!)

✅ Show the AI your dog photos and see what it thinks 🐶

✨ Final Thoughts

Learning how AI sees the world has been humbling. We take for granted how easily our brains identify a cat, a car, or a coffee cup. But getting a machine to do that? It’s a whole new kind of creativity—and precision.

I’ve only just started scratching the surface of computer vision.

Next up in the AI Voyager journey: training AI to classify images (can it tell a muffin from a chihuahua?).

Can’t wait to share that one.

Until next week, keep exploring one pixel at a time.

– André Barnard, AI Explorer
